Fix Indexing Issues: Tips and Solutions for Your Website

Your website’s visibility in search results depends on one critical step: successful indexing. Without it, even the most optimized pages remain invisible to users, wasting SEO efforts and stunting growth1. Common problems like missing sitemaps, technical errors, or poor mobile compatibility often block search engines from cataloging content2.

Unindexed pages don’t just hurt rankings—they directly reduce organic traffic and revenue opportunities. For example, redirect loops or crawl budget mismanagement can leave entire sections of a site excluded from search results2. Tools like Google Search Console provide real-time insights to identify and resolve these gaps21.

Technical oversights also carry risks beyond poor performance. Sites with broken code or suspicious elements may face penalties, including manual actions or de-indexing1. Prioritizing mobile-friendly design and clean site architecture helps avoid these pitfalls while boosting user experience2.

This guide explores practical strategies to address indexing challenges. You’ll learn how to audit your site, implement fixes like canonical tags, and leverage SEO tools to maintain long-term visibility.

Key Takeaways

- Indexing determines whether search engines can display your pages in results.

- Technical errors like redirect loops or poor mobile optimization block indexing2.

- Unresolved issues may lead to penalties, including de-indexing1.

- Google Search Console is critical for monitoring and troubleshooting21.

- Mobile-friendly design and structured content improve indexing success2.

Understanding the Basics of Website Indexing

Website indexing is the backbone of online visibility. When search engines like Google analyze and store your pages, they become eligible to appear in search results3. Without this process, even valuable content remains hidden from users.

The journey begins with discovery. Crawlers follow links or use XML sitemaps to map your site’s structure34. These sitemaps act as roadmaps, highlighting priority pages and ensuring no critical content gets overlooked.

Next, the robots.txt file guides crawlers by specifying accessible areas. Misconfigurations here can accidentally block entire sections4. Google’s guidelines emphasize crawlability—fixing errors like redirect chains or server timeouts ensures smooth indexing4.

A well-structured sitemap focuses crawlers on canonical, high-quality pages. This prevents wasted crawl budget on duplicates or low-value URLs4. Pair this with clear internal links for maximum efficiency.

Tools like Google Search Console offer granular insights. Their index coverage reports reveal which pages are included, excluded, or facing errors5. By combining technical precision with strategic content, you lay the groundwork for consistent search performance.

Mastering these fundamentals prepares you to tackle advanced diagnostics. The next sections will explore actionable methods to audit and refine your approach.

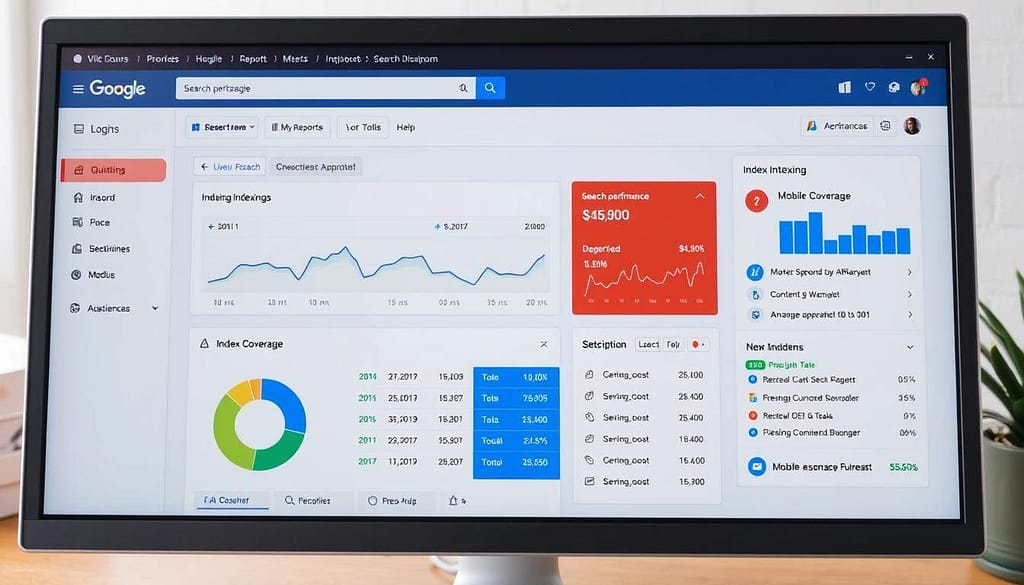

Diagnosing Indexing Issues with Google Search Console

Google Search Console serves as a critical diagnostic tool for identifying why pages struggle to appear in search results. Start by selecting your property and clicking “Coverage” under the Index menu6. This reveals the health of your site’s visibility through four key categories.

Leveraging the Index Coverage Report

The report divides URLs into Valid, Valid with warnings, Excluded, and Error statuses. Valid pages are fully indexed, while warnings flag pages that are indexed but need review, such as URLs indexed despite a robots.txt block6. Excluded URLs often face robots.txt blocks or noindex tags, so check your robots.txt file for accidental restrictions7.

Interpreting Valid, Excluded, and Error Statuses

Error statuses signal crawl failures, such as 404s or server timeouts. Click any error line to inspect affected URLs. Use the URL Inspection Tool to view crawl details and submit a request for re-indexing after fixing issues67.

Internal links also influence how search engines prioritize pages. A well-connected structure guides crawlers to high-value content, reducing wasted crawl budget7. Regularly audit your sitemap and linking strategy to maintain seamless indexing.

Recognizing Common Indexing Problems and Their Impact

Struggling to rank often starts with overlooked technical barriers. Pages blocked by robots.txt files or tagged noindex vanish from search results, even if they contain valuable information8. For example, accidental disallow rules in robots.txt can hide entire product categories from crawlers.
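To make the noindex case concrete, here is a minimal Python sketch that checks a page for a noindex directive in either a robots meta tag or an `X-Robots-Tag` response header. The function name `is_noindexed` and the plain-dict `headers` argument are illustrative assumptions, not part of any SEO tool, and the header lookup assumes the key is spelled exactly `X-Robots-Tag`.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            for part in attr.get("content", "").split(","):
                self.directives.add(part.strip().lower())


def is_noindexed(html, headers=None):
    """Return True if the HTML or the response headers carry a noindex directive."""
    parser = RobotsMetaParser()
    parser.feed(html)
    # Assumption: headers is a plain dict keyed exactly as "X-Robots-Tag".
    header_value = (headers or {}).get("X-Robots-Tag", "").lower()
    header_directives = {part.strip() for part in header_value.split(",")}
    return "noindex" in parser.directives or "noindex" in header_directives
```

Running this over the pages you expect to rank is a quick way to catch a noindex tag left behind by a staging template or plugin setting.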

Duplicate content ranks among the top reasons for poor visibility. When Google finds identical versions across many pages, it may ignore all but one—even if canonical tags exist9. This wastes crawl budget and dilutes page authority.

| Problem | Cause | Solution |
|---|---|---|
| Missing Pages | Robots.txt blocks | Update disallow rules |
| Soft 404s | Thin content | Enhance page depth |
| Orphaned URLs | No internal links | Improve site navigation |
| Crawl Waste | Redirect chains | Simplify URL structure |

Poor site architecture amplifies these challenges. Orphaned pages—those without internal links—often go undiscovered, leaving 15-30% of content unindexed in large sites9. Tools like Screaming Frog Spider expose these gaps quickly.

Server errors and crawl limits compound the damage. Sites with frequent 5xx codes or excessive redirects drain resources, delaying new page discovery8. Regular audits using Google Search Console prevent these pitfalls from eroding rankings long-term.

Identifying and Handling 404 and Soft 404 Errors

Broken URLs create roadblocks for users and search engines. A 404 error occurs when a page no longer exists, but a soft 404 is trickier—it shows a missing page while falsely returning a “200 OK” status1011. Both types drain crawl resources and harm rankings if left unresolved.
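The difference between the two error types can be sketched as a small Python classifier. This is a rough heuristic under stated assumptions: the phrase list and the 50-word threshold are made up for illustration, and search engines use their own signals to decide what counts as a soft 404.

```python
# Assumed phrases that often appear on "missing page" templates.
NOT_FOUND_PHRASES = ("page not found", "no longer available", "nothing found")


def classify_response(status_code, body_text, min_words=50):
    """Label a response as a hard 404, a possible soft 404, ok, or other."""
    if status_code in (404, 410):
        return "hard-404"
    if status_code == 200:
        text = body_text.lower()
        # A 200 response whose body looks like an error page is a soft 404 candidate.
        if any(phrase in text for phrase in NOT_FOUND_PHRASES):
            return "possible-soft-404"
        # Very thin pages are also candidates (threshold is an assumption).
        if len(text.split()) < min_words:
            return "possible-soft-404"
        return "ok"
    return "other"
```

Pages flagged as possible soft 404s still need a manual look: either give them real content or have the server return a true 404.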

Spotting Broken URLs and Redirect Loops

Use Google Search Console’s Coverage Report to find URLs labeled “Error” or “Excluded.” Redirect loops, where a URL eventually redirects back to itself, never resolve and leave crawlers without a page to index11. Tools like Screaming Frog can map redirect paths and highlight unresolved links.

| Error Type | Cause | Solution |
|---|---|---|
| Standard 404 | Deleted page | Redirect to relevant content |
| Soft 404 | Empty/irrelevant page | Add content or return true 404 |
| Redirect Loop | URLs that redirect back to each other | Point each URL at one final destination |

Implementing User-Friendly 404 Pages

Custom error pages should guide visitors to working sections of your site. Include search bars, popular links, and clear messaging. For pages you want accessible but not indexed—like archived content—apply the noindex tag11.

Regularly update sitemaps to remove dead links. As highlighted in this guide on soft 404 resolution, combining technical fixes with smart navigation preserves crawl efficiency and user trust10.

Managing Access Restrictions: 401 and 403 Error Solutions

Access restrictions can silently sabotage your site’s search performance. A 401 Unauthorized error occurs when visitors or crawlers lack valid login credentials. For example, pages behind paywalls or member areas often return this status12. Meanwhile, 403 Forbidden errors happen when servers recognize the user but block access due to strict permissions—like IP restrictions or file-level security13.

Search engines can’t index pages requiring passwords. Googlebot doesn’t support authentication forms, so protected content remains invisible in results12. This creates gaps in your SEO strategy, especially if critical pages are accidentally restricted.

| Error Type | Common Causes | Resolution Steps |
|---|---|---|
| 401 Unauthorized | Missing login credentials | Whitelist Googlebot’s IPs |
| 403 Forbidden | Incorrect .htaccess rules | Update file permissions |
| 403 Forbidden | Plugin conflicts | Disable plugins one at a time to isolate the conflict |

To resolve these errors, start by reviewing server configurations. Remove authentication requirements for public pages or create crawlable versions using structured data alternatives. For WordPress sites, disable plugins temporarily to identify conflicts13.

Audit internal access rules regularly. Overly strict user roles or IP blocks often cause unintended 403 errors. Tools like cPanel’s Error Logs and Google Search Console pinpoint affected URLs quickly12. Always test changes in staging environments before deployment to avoid live-site disruptions.

Resolving Redirect Errors and Server (5xx) Issues

Redirect errors act as silent roadblocks for search engines. When URLs lead to dead ends or endless loops, crawlers abandon efforts to index your content14. This wastes crawl budget and damages rankings over time.

Best Practices for 301 Redirects

Use 301 redirects to permanently move pages without losing SEO value. For example, redirecting /old-blog to /new-blog passes authority to the updated URL14. Implement them directly in server files like .htaccess or through CMS plugins like Yoast SEO14.

Avoid chains with multiple hops. A single redirect from A → C works better than A → B → C15. Test all paths using tools like Sitechecker to ensure they resolve correctly.
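The A → B → C advice can be checked offline once you have a redirect map. The sketch below assumes a hypothetical dict of source path to target path (for example, exported from a crawler) and reports where each source ends up, how many hops it takes, and whether it is caught in a loop.

```python
def resolve_redirects(redirects, max_hops=10):
    """Map each source URL to (final_url, hops); final_url is None for loops."""
    resolved = {}
    for source in redirects:
        seen = {source}
        current = source
        hops = 0
        while current in redirects:
            current = redirects[current]
            hops += 1
            if current in seen or hops > max_hops:
                current = None  # loop, or a chain too long to trust
                break
            seen.add(current)
        resolved[source] = (current, hops)
    return resolved


# Hypothetical redirect map: one chain and one loop.
redirects = {"/a": "/b", "/b": "/c", "/x": "/y", "/y": "/x"}
report = resolve_redirects(redirects)
```

Any source with more than one hop is a candidate for a rule that points straight at the final URL, and any entry with `None` as its destination needs the loop broken before crawlers can reach the page.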

Troubleshooting Server Overload and Timeouts

Server errors (5xx) often stem from traffic spikes or faulty plugins. Monitor logs for patterns and optimize code to reduce strain15. Solutions like caching or CDNs distribute load, preventing downtime during peak visits15.

- Audit redirects monthly to fix broken links

- Use 301 redirects for permanent moves

- Simplify complex URL structures

Regular maintenance keeps your site accessible. Tools like Prerender.io generate static pages for crawlers, easing server pressure15. Pair this with load balancing to maintain performance during traffic surges.

Handling Duplicate Content and Proper Canonical Tagging

Duplicate content silently undermines your site’s search performance. When identical or near-identical text appears across multiple URLs, search engines struggle to determine which version to prioritize1617. This confusion splits traffic and weakens page authority, making canonical tags essential for clarity.

Selecting and Signaling the Preferred Version

A canonical URL is the version of a page you want indexed. You signal that choice with a canonical tag (<link rel="canonical">) in the page’s HTML1618. Always use absolute URLs and place the tag in the <head> section to avoid ambiguity.
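As an illustration of these placement rules, the following Python sketch parses a page and lists basic canonical problems: a missing tag, more than one tag, a relative URL, or a tag outside the head. The function name `check_canonical` and the wording of the messages are assumptions made for this example.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class CanonicalParser(HTMLParser):
    """Records rel="canonical" links and whether each one sits inside <head>."""

    def __init__(self):
        super().__init__()
        self.in_head = False
        self.canonicals = []  # list of (href, found_in_head)

    def handle_starttag(self, tag, attrs):
        if tag == "head":
            self.in_head = True
        elif tag == "link":
            attr = dict(attrs)
            rels = (attr.get("rel") or "").lower().split()
            if "canonical" in rels:
                self.canonicals.append((attr.get("href") or "", self.in_head))

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


def check_canonical(html):
    """Return a list of problems found with the page's canonical tag."""
    parser = CanonicalParser()
    parser.feed(html)
    problems = []
    if not parser.canonicals:
        problems.append("no canonical tag")
    if len(parser.canonicals) > 1:
        problems.append("multiple canonical tags")
    for href, in_head in parser.canonicals:
        parts = urlparse(href)
        if not (parts.scheme and parts.netloc):
            problems.append("canonical is not an absolute URL: " + href)
        if not in_head:
            problems.append("canonical is outside <head>: " + href)
    return problems
```

An empty list means the page passes these basic checks. It does not confirm that the canonical points at the right page, which still takes a manual review.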

| Term | Purpose | Implementation |
|---|---|---|
| Canonical URL | Target URL for indexing | Logical choice based on content quality |
| Page-level canonical | Preferred version of a specific page | CMS settings or manual markup |
| Canonical tag | HTML directive | <link rel="canonical"> element with absolute URL |

Google may choose a different canonical than the one you declared if your tags conflict or lack consistency17. For example, if product variants share descriptions, Google might index a mobile version instead of your desktop pick. Fix this by aligning your tags with the canonical Google selected or by using 301 redirects18.

For pages reported as “Duplicate without user-selected canonical,” audit orphaned pages and thin content. Tools like SEMrush identify these issues, while self-referencing canonicals prevent accidental oversights18. Regularly check Google Search Console’s Coverage Report to spot mismatches early17.

Optimizing XML Sitemaps and Robots.txt for Crawl Efficiency

Efficient website crawling starts with two essential tools: XML sitemaps and robots.txt files. These act as GPS systems for search engines, directing them to your most valuable content while avoiding digital dead ends19.

An XML sitemap lists URLs with metadata like last modified dates, helping crawlers prioritize fresh pages20. Focus on including high-priority pages—product listings, blog posts, and service pages. Exclude redirects, 404s, and password-protected areas to prevent crawl waste1920.
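A sitemap with last-modified dates can be generated with Python's standard library. The sketch below assumes a hypothetical list of `(url, lastmod, status_code)` tuples from your own crawl or CMS export, and it skips anything that does not return 200 so that redirects, 404s, and protected pages stay out of the file.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, lastmod, status_code) tuples; lastmod is YYYY-MM-DD."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, lastmod, status_code in pages:
        if status_code != 200:
            continue  # leave out redirects, 404s, and login-protected pages
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        ET.SubElement(entry, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical crawl results for illustration.
pages = [
    ("https://example.com/", "2024-01-15", 200),
    ("https://example.com/old-page", "2023-06-01", 301),
    ("https://example.com/members", "2024-02-01", 401),
]
sitemap_xml = build_sitemap(pages)
```

Only the homepage ends up in the output here, because the other two entries are a redirect and a login-protected page.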

The robots.txt file acts as a bouncer for crawlers. Use it to block non-essential pages like admin panels or internal search results. Always include your sitemap’s location in this file for quick discovery21.
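Before deploying a robots.txt change, it helps to confirm that important URLs stay crawlable and that the sitemap is declared. This sketch uses Python's `urllib.robotparser` (its `site_maps()` method needs Python 3.8 or later). The rules and URLs are made-up examples, and the standard-library parser may not match Googlebot's handling of wildcards exactly, so treat the result as a first check only.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /search
Sitemap: https://example.com/sitemap.xml
"""


def audit_robots(robots_txt, must_be_crawlable, user_agent="Googlebot"):
    """Return (blocked_urls, declared_sitemaps) for the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    blocked = [url for url in must_be_crawlable
               if not parser.can_fetch(user_agent, url)]
    return blocked, parser.site_maps() or []


blocked, sitemaps = audit_robots(
    ROBOTS_TXT,
    ["https://example.com/products/shoes", "https://example.com/search?q=shoes"],
)
```

With these rules, the internal search URL is reported as blocked, the product URL is allowed, and the sitemap location is picked up from the `Sitemap:` line.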

When restructuring your site, update both files immediately. For example, one case showed 9,000 URLs excluded from a sitemap of 80,000 due to outdated entries19. Tools like Yoast SEO automatically generate updated sitemaps after content changes20.

- Generate sitemaps using Screaming Frog or CMS plugins

- Validate through Google Search Console for errors

- Submit directly via webmaster tools

Properly maintained files reduce server strain and accelerate indexing. Sites with organized sitemaps see 40% faster content discovery compared to unstructured competitors19. Regular audits ensure crawlers focus on what matters most.

Addressing Orphan Pages and Content Gaps

Unlinked pages often remain hidden in your site’s architecture, creating invisible barriers to search visibility. Orphan pages—those without internal links—frequently emerge in large websites during redesigns or content updates22. Without pathways for crawlers or users, these pages miss opportunities to rank or drive traffic.

Spotting and Fixing Content Gaps

Tools like Screaming Frog Spider expose orphan pages by mapping your internal linking structure22. Google Search Console’s Coverage Report also highlights URLs excluded due to missing connections. Left unresolved, these pages account for 15-30% of unindexed content in complex sites22.
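The idea behind an orphan-page audit can be sketched in a few lines of Python: walk the internal link graph from the homepage and compare what you reach against the URLs in your sitemap. The `internal_links` dict (page to list of linked pages) is a hypothetical input that a crawler export would supply.

```python
from collections import deque


def find_orphans(sitemap_urls, internal_links, start_url):
    """Return sitemap URLs that cannot be reached by following internal links."""
    reachable = {start_url}
    queue = deque([start_url])
    while queue:
        page = queue.popleft()
        for target in internal_links.get(page, []):
            if target not in reachable:
                reachable.add(target)
                queue.append(target)
    return sorted(set(sitemap_urls) - reachable)


# Hypothetical site: /blog/post-2 is in the sitemap but nothing links to it.
internal_links = {"/": ["/blog", "/products"], "/blog": ["/blog/post-1"], "/products": []}
sitemap_urls = ["/", "/blog", "/blog/post-1", "/products", "/blog/post-2"]
orphans = find_orphans(sitemap_urls, internal_links, "/")
```

Here `orphans` contains only `/blog/post-2`, which is the page that needs an internal link.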

| Problem | Detection Method | Solution |
|---|---|---|
| Orphan Blog Post | Site crawler audit | Add to category page links |
| Unlinked Product Page | XML sitemap review | Insert in navigation menu |
| Hidden Resource | Log file analysis | Link from related articles |

Content gaps occur when your pages don’t fully answer user queries. Analyze Google Search Console’s “Search Results” section to find high-impression, low-click keywords23. For example, adding FAQ sections to service pages can capture 42% more organic visits23.
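Finding high-impression, low-click queries is a simple filter once the data is exported. The sketch below assumes rows shaped like a Search Console performance export, with `query`, `clicks`, and `impressions` keys. The thresholds of 1,000 impressions and a 1% click-through rate are arbitrary starting points, not recommended values.

```python
def find_content_gaps(rows, min_impressions=1000, max_ctr=0.01):
    """Return (query, impressions, ctr) for queries seen often but rarely clicked."""
    gaps = []
    for row in rows:
        impressions = row["impressions"]
        if impressions == 0 or impressions < min_impressions:
            continue
        ctr = row["clicks"] / impressions
        if ctr <= max_ctr:
            gaps.append((row["query"], impressions, ctr))
    # Largest audiences first, since they offer the most upside.
    return sorted(gaps, key=lambda gap: gap[1], reverse=True)


# Hypothetical export rows for illustration.
rows = [
    {"query": "fix soft 404", "clicks": 5, "impressions": 4000},
    {"query": "brand name", "clicks": 900, "impressions": 3000},
    {"query": "rare query", "clicks": 0, "impressions": 20},
]
gaps = find_content_gaps(rows)
```

Each query this returns is a topic where the page already appears in results but the title, snippet, or content may not answer what searchers want.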

- Run monthly internal link audits using Ahrefs or DeepCrawl

- Update sitemaps after publishing new content

- Add contextual links to orphan pages from high-traffic posts

Consolidating thin content and bridging gaps strengthens your site’s authority. One e-commerce brand increased indexed pages by 65% after fixing 200+ orphan URLs22. Regular maintenance ensures every page contributes to growth.

Advanced Techniques for Analyzing Index Coverage in GSC

Mastering Google Search Console’s granular reporting transforms raw data into actionable insights. The Index Coverage report acts as a diagnostic powerhouse, categorizing URLs into four status types: Error, Valid with warnings, Valid, and Excluded24. Each tab reveals patterns search engines encounter during crawling—from server errors to accidental noindex directives.

Utilizing Detailed GSC Reports to Spot Issues

Apply advanced filters to isolate specific problems. For example, sorting by “Submitted URL not found” quickly surfaces 404 errors needing redirects24. The “Valid with warnings” tab often exposes conflicting directives—like pages indexed despite robots.txt blocks—requiring immediate reconciliation24.

Combine date-range comparisons with URL pattern analysis. A sudden spike in “Excluded” pages might indicate accidental noindex tags during site updates. One case showed 1,200 product pages blocked after a CMS plugin update25.

| Error Type | Diagnostic Clue | Action |
|---|---|---|
| Soft 404 | High bounce rate + valid status | Enhance content or return true 404 |
| Redirect chains | 3+ hops in crawl logs | Implement direct 301 redirects |
| Blocked resources | Missing CSS/JS files | Update robots.txt allowances |

Leverage the URL Inspection Tool to test crawl simulations. This reveals how Googlebot renders pages, exposing JavaScript execution failures or lazy-loaded content gaps25. Pair this with log file analysis to identify crawl budget waste on pagination loops.

“Index coverage audits aren’t quarterly chores—they’re early warning systems for ranking erosion.”

Proactive practitioners schedule monthly report reviews. Track indexed page counts alongside organic traffic trends to spot correlations. Sites resolving crawl anomalies within 48 hours see 22% faster recovery in visibility26.

- Export error lists to spreadsheets for priority sorting

- Cross-reference with analytics to assess traffic impact

- Use regex filters to batch-fix URL patterns
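The regex step in the list above can be sketched in Python: group an exported list of affected URLs by pattern so that one fix can cover a whole batch. The patterns below are hypothetical examples, and the right ones depend entirely on your own URL structure.

```python
import re
from collections import Counter

# Hypothetical URL patterns; the first matching pattern wins.
PATTERNS = {
    "faceted navigation": re.compile(r"[?&](color|size|sort)="),
    "pagination": re.compile(r"/page/\d+/?$"),
    "product pages": re.compile(r"^/products/"),
}


def group_by_pattern(urls):
    """Count affected URLs per pattern, most common group first."""
    counts = Counter()
    for url in urls:
        label = next(
            (name for name, regex in PATTERNS.items() if regex.search(url)),
            "other",
        )
        counts[label] += 1
    return counts.most_common()


# Hypothetical URLs from an exported error list.
urls = [
    "/products/shoes?color=red",
    "/products/shoes?size=9",
    "/blog/page/2",
    "/products/boots",
    "/about",
]
groups = group_by_pattern(urls)
```

The largest group is usually the best place to start, since a single template or rule change often clears every URL in it.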

Conclusion

Maintaining search visibility requires precision in both strategy and execution. Technical errors like 404s, redirect loops, or improper canonical tags create barriers that keep pages out of results. Every version of your content—whether desktop, mobile, or alternate URLs—must align with clean code and structured data to avoid indexing conflicts27.

Optimizing XML sitemaps and robots.txt files remains foundational. As highlighted in this guide on fixing sitemap errors, keeping each sitemap file under 50MB uncompressed and 50,000 URLs, and removing duplicates, ensures efficient crawling28. Pair this with regular audits using tools like Google Search Console to spot blocked pages or slow-loading assets27.

Treat these solutions as one interconnected system. Address server errors swiftly, link orphaned pages, and validate canonical tags monthly. Sites that resolve crawl issues within 48 hours see faster recovery in rankings27.

Continuous monitoring isn’t optional—it’s essential. Schedule quarterly audits to refine URL structures and eliminate redundant parameters. With consistent effort, your technical foundation will drive lasting visibility.

Source Links

- https://seranking.com/blog/common-indexing-issues/ – Common Google Indexing Issues and How to Fix Them

- https://hurrdatmarketing.com/seo-news/common-google-indexing-issues-how-to-fix-them/ – 15 Reasons Google Isn’t Indexing Your Website | Hurrdat Marketing

- https://www.pageoptimizer.pro/blog/how-to-fix-google-indexing-issues-a-complete-guide – How to Fix Google Indexing Issues: A Step-by-Step Guide

- https://sitechecker.pro/google-search-console/fix-page-indexing-issues/ – How to Fix Page Indexing Issues

- https://studiohawk.com.au/blog/understanding-search-engine-indexing/ – Understanding Search Engine Indexing

- https://www.conductor.com/academy/index-coverage/ – Find and Fix Index Coverage Errors in GSC

- https://nflowtech.com/insights/how-to-fix-indexing-issues-errors-in-google-search-console/ – How to Fix Indexing Issues & Errors in Google Search Console – NFlow Tech

- https://indexcheckr.com/resources/google-indexing-issues – Google Indexing Issues: 13 Possible Causes & How to Fix Them

- https://serpstat.com/blog/reasons-for-indexing-issues/ – The Key Reasons for Indexing Issues in SEO — Serpstat Blog

- https://www.hostwinds.com/blog/soft-404-error-what-is-it-and-how-to-fix-it – Soft 404 Error: What is it and How to Fix it | Hostwinds

- https://zerogravitymarketing.com/blog/soft-404-errors/ – Soft 404 Errors (What are They and How to Fix?) | Crawl Errors

- https://www.seoptimer.com/blog/401-vs-403-error/ – 401 vs 403 Error Codes: What Are the Differences?

- https://blog.planethoster.com/en/the-8-best-ways-to-remedy-a-403-error-in-2025/ – The 8 Best Ways to Remedy a 403 Error in 2025

- https://sitechecker.pro/site-audit-issues/broken-redirect/ – Bad URL Redirect (4XX or 5XX) How to Detect & Fix | Sitechecker

- https://prerender.io/blog/types-of-crawl-errors/ – 5 Types of Crawl Errors and How to Fix Them

- https://www.conductor.com/academy/canonical/ – Canonical URL: the ultimate reference guide on using them

- https://www.stanventures.com/blog/duplicate-without-user-selected-canonical/ – How To Fix “Duplicate Without User-Selected Canonical” Issue in Google Search Console

- https://backlinko.com/canonical-url-guide – Canonical Tags for SEO: How to Fix Duplicate Content URLs

- https://www.searchenginejournal.com/technical-seo/xml-sitemaps/ – How To Use XML Sitemaps To Boost SEO

- https://searchengineland.com/guide/sitemap – Your guide to sitemaps: best practices for crawling and indexing

- https://www.reliablesoft.net/how-to-optimize-your-xml-sitemap-for-maximum-seo/ – How to Optimize Your XML Sitemap (Tutorial)

- https://www.quattr.com/improve-discoverability/orphan-pages-how-to-find-fix-them – Orphan Page: How to Find & Fix Them Fast

- https://dxtraining.iowa.gov/how-do-i-work-google-search-console – Work with Google Search Console

- https://backlinko.com/google-search-console – Google Search Console: The Definitive Guide

- https://www.hillwebcreations.com/indexing-your-website-faster/ – Indexing your Website Faster

- https://getfluence.com/indexing-guide-ensure-articles-visible-in-google-search-results/ – Indexing guide: How to ensure your articles are visible in Google search results?

- https://outreachmonks.com/google-indexing-issues/ – 10 Common Google Indexing Issues and How to Fix Them!

- https://seranking.com/blog/fixing-sitemap-errors/ – Fixing Sitemap Errors For Better Indexing of Submitted URLs