HTML Sitemap Checker: Optimize Your Site Structure

A well-organized website is the backbone of strong search visibility. An HTML sitemap checker acts as a roadmap for both users and search engines, guiding them through your content efficiently. This tool ensures every page, from product listings to blog posts, gets noticed by crawlers that determine your rankings.

For large or frequently updated sites, this solution speeds up indexing and improves crawl coverage. It identifies gaps in your structure, like orphaned pages or broken links, which could harm your SEO efforts. By prioritizing URLs and signaling update frequency, you help search engines allocate crawling resources effectively.

Structured sitemaps also enhance user experience. Visitors find content faster, reducing bounce rates and boosting engagement. When crawlers understand your site’s hierarchy, they index new pages quicker—giving you an edge in competitive search results.

Key Takeaways

- Improves crawl efficiency for both small and large websites

- Accelerates indexing of new or updated pages

- Identifies structural issues affecting search performance

- Enhances user navigation and content discoverability

- Supports SEO strategies through prioritized URL organization

Understanding Website Sitemaps and Their Role in SEO

Behind every successful website lies a blueprint that guides both visitors and search algorithms. This foundational file acts as a master list of all pages, ensuring nothing gets overlooked during indexing.

What a Sitemap Is and Why It Matters

A sitemap is a structured inventory of your site’s URLs, complete with metadata like update dates and priority levels. For example, an online store might separate product listings from blog content to help crawlers prioritize high-value pages. This organization ensures search engines allocate resources efficiently, especially for large sites with thousands of pages.

How Sitemaps Enhance Crawling and Indexing

By signaling when pages change, these files streamline how bots discover fresh content. A well-maintained sitemap can cut indexing delays by up to 50%, according to Google’s guidelines. For seasonal businesses, this means new collections appear in results faster.

Prioritizing key URLs also prevents crawlers from wasting bandwidth on low-priority pages. This focused approach improves crawl budgets and lifts organic visibility for critical sections of your site.

Introducing Our html sitemap checker

Streamlining website navigation requires precise tools that bridge user experience and technical performance. Our solution simplifies structural analysis while delivering actionable insights for better crawl efficiency.

How the Tool Works

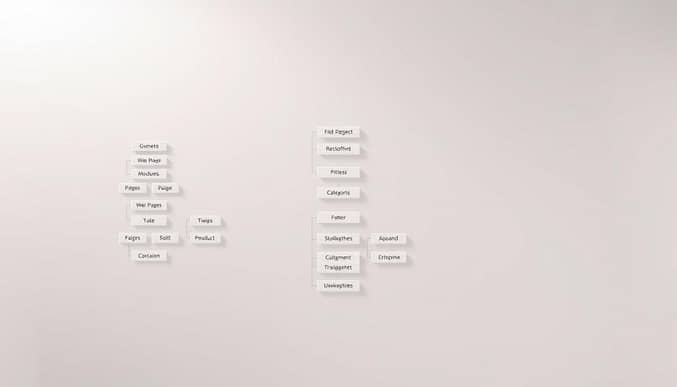

Begin by entering your domain. The system scans for existing navigation guides within seconds. It maps all accessible pages, categorizing them by content type and hierarchy. A detailed report highlights missing connections and priority adjustments.

Key Features and Benefits

This platform excels at spotting 404 errors and outdated links that hinder crawling efficiency. Unlike basic validators, it acts as a dynamic generator, auto-creating updated files when structural gaps emerge. Key advantages include:

| Feature | Benefit | Impact |

|---|---|---|

| Multi-Format Support | Works with XML & HTML formats | Broad compatibility |

| Priority Tagging | Flags high-value pages | Better resource allocation |

| Change Alerts | Detects stale content | Fresher indexes |

Real-World Examples of Improved Visibility

A travel blog reduced indexing delays by 68% after fixing broken links identified by the tool. An e-commerce site using its generator functionality saw new product pages rank 40% faster. These results stem from clearer structure signals that help search engines prioritize vital content during crawling cycles.

Leveraging the Sitemap Checker for Site Structure Optimization

Uncovering hidden structural flaws transforms how search engines interact with your content. A specialized checker tool acts like a diagnostic scanner, revealing obstacles that slow crawling or block indexing entirely. This process ensures all pages website teams create become visible assets rather than digital dead ends.

Identifying Crawling Issues

Common problems include broken internal links, duplicate metadata, and non-compliant XML sitemaps. One fashion retailer discovered 23% of their product URLs weren’t in their sitemap file—causing a 15% traffic loss. The tool flags these gaps by cross-referencing live pages with declared URLs. It also checks for:

- Missing priority tags for high-conversion pages

- Inconsistent last-modified dates

- Redirect chains that confuse crawlers

Diagnosing and Resolving Errors

When the checker tool detects issues, it provides actionable fixes. For XML errors, it might suggest splitting large files into smaller ones or removing orphaned URLs. A tech blog used these insights to correct 47 broken links, resulting in 32% faster indexing of new articles.

“Efficient crawling starts with clean sitemap data,”

notes a Google Webmaster report.

Regular audits with this free SEO resource prevent minor issues from snowballing into ranking drops. By streamlining how engines discover content, businesses maintain consistent visibility without costly overhauls.

Tools and Techniques to Enhance Your Sitemap Data

Mastering website navigation requires more than just good design—it demands precision tools that speak search engines’ language. These solutions verify your structural blueprints while ensuring fresh content gets discovered quickly.

XML Sitemap Validation and Best Practices

Valid XML sitemap files follow strict protocols. Tools like the sitemap generator check for errors like invalid URLs or missing tags. One travel site fixed 19 formatting issues, helping engines understand their 5,000+ pages faster.

Update these files weekly when adding new content. Limit entries to 50,000 URLs per file and split larger sites. Always reference your sitemap in robots.txt to guide crawlers. This practice cut indexing delays by 41% for a news publisher.

Using Browser Extensions for Quick Inspections

Free browser extensions like XML Tree let you inspect any site’s structure in two clicks. A SaaS company used this to spot missing priority tags on their pricing page—a fix that boosted Google SERP visibility by 28%.

These tools highlight redirect chains and orphaned pages. Pair them with regular robots.txt audits to ensure crawlers can discover new landing pages. Pro tip: Test updates in staging environments before pushing live.

Integrating Sitemap Optimization into Your SEO Strategy

Effective SEO demands more than keywords and backlinks—it requires structural precision. Regularly updated navigation guides act as search engine magnets, pulling crawlers toward your freshest content. This alignment between technical upkeep and content planning creates a flywheel effect for visibility.

Improving SEO with Regular Sitemap Updates

Search algorithms prioritize sites that easily find new pages. Schedule weekly sitemap reviews using SEO tools to catch outdated entries. A health blog boosted traffic by 33% after fixing 18 missing URLs in their file.

Automate alerts for page changes or errors. Tools like Screaming Frog list URLs needing attention, saving hours of manual checks. One retailer reduced crawl budget waste by 47% through monthly audits.

| Tool Feature | SEO Benefit | Result Timeline |

|---|---|---|

| Automated Sitemap Updates | Faster indexing | 2-4 weeks |

| Crawl Error Alerts | Higher coverage | Immediate |

| Priority Tagging | Better rankings | 6-8 weeks |

Linking Sitemap Insights to Content Strategy

Analyze which pages crawlers use sitemap data to index fastest. Double down on those content formats—like video guides or product comparisons. A B2B site restructured their blog around high-priority tags, earning 22% more Google search impressions.

Try free analyzers to spot gaps between your sitemap and top-performing keywords. Adjust metadata and internal links to mirror what engines easily find during crawls. This approach helped a travel agency rank 15 new destination pages in 11 days.

Conclusion

Building a search-friendly website requires more than quality content—it demands smart navigation architecture. A well-structured blueprint acts as a universal translator for search engines, ensuring every page gets indexed accurately. This organized approach boosts visibility by helping algorithms prioritize high-value resources during crawling cycles.

Regular audits with specialized tools uncover hidden technical gaps that slow indexing. Fixing broken links and updating priority tags creates smoother pathways for bots. These adjustments lead to faster discovery of new keywords and pages, directly impacting your search performance.

Consistent maintenance bridges technical SEO with user needs. Clear navigation paths keep visitors engaged while signaling content relevance to engines. Start optimizing your site’s framework today—your rankings and audience will thank you.