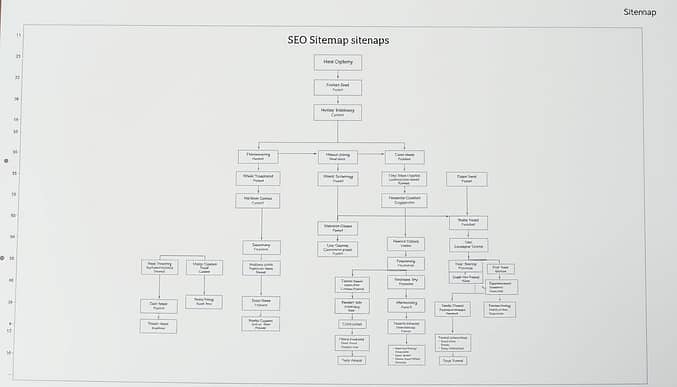

How to Create a Sitemap in Wix: A Step-by-Step Guide

Building a website is just the first step. To ensure your content reaches its audience, search engines need to understand your site’s structure. That’s where an XML sitemap comes in. Think of it as a roadmap that helps crawlers efficiently find and index your pages. While platforms like Wix handle this automatically, reviewing and fine-tuning it helps make sure search engines focus on the pages you actually want found.

This guide walks you through creating, submitting, and fine-tuning your sitemap. You’ll learn why these files matter for visibility and how to make adjustments even when your platform does most of the work. For example, while Wix generates and updates sitemaps by default, certain scenarios—like excluding specific pages—require manual input.

We’ll also cover tools like Screaming Frog for deeper analysis. Whether you’re new to SEO or refining existing strategies, this step-by-step approach ensures your site stays crawlable and competitive. Ready to unlock better rankings? Let’s dive in.

Key Takeaways

- XML sitemaps act as critical guides for search engines to discover and index website content.

- Wix automatically generates and updates sitemaps, reducing manual effort for users.

- Custom adjustments, like hiding pages from search results, may still require user intervention.

- Submitting your sitemap to tools like Google Search Console helps search engines discover new and updated pages sooner.

- Third-party SEO tools can help audit and optimize sitemap accuracy.

Introduction to Sitemaps and Their Role in SEO

Ensuring all your web pages get noticed starts with a crucial SEO tool: the sitemap. This file acts like a GPS for search engines, mapping every corner of your digital presence. Without it, crawlers might miss pages buried deep in your structure or those not linked internally.

HTML and XML formats serve different audiences. HTML versions help human visitors navigate your site, while XML files focus solely on search engines. The latter lists URLs with metadata such as the last-modified date, helping bots spot fresh content.

Why does this matter? Orphan pages—those without internal links—often stay hidden without a sitemap. By including them, you ensure crawlers index even hard-to-find sections. Pair this with smart internal linking, and your site’s visibility climbs.

| Feature | HTML Sitemap | XML Sitemap |
|---|---|---|
| Primary Audience | Human Visitors | Search Engines |
| SEO Impact | Indirect (User Experience) | Direct (Crawl Efficiency) |
| Content Focus | Page Hierarchy | URL Metadata |

For optimal results, integrate sitemaps into your broader SEO strategy. Tools like Google Search Console use them to identify crawl errors or outdated pages. Combined with clean navigation, they create a seamless path for both users and algorithms.

What is an XML Sitemap?

Behind every well-indexed website lies a hidden blueprint. XML sitemaps act as machine-readable directories, listing every page you want search engines to find. Unlike visual site navigation, these files speak directly to crawlers through standardized code.

Definition and Key Concepts

An XML sitemap uses simple tags to outline your site’s structure. The <loc> tag specifies page URLs, while <lastmod> shows when content changed. This helps algorithms prioritize fresh material during crawls.

```xml
<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url>
    <loc>https://example.com/page1</loc>
    <lastmod>2024-03-15</lastmod>
  </url>
</urlset>
```

Core Elements and Structure

Every XML sitemap is built from three components:

- An XML declaration stating the version and encoding (UTF-8)

- The <urlset> wrapper, which declares the sitemap namespace and encloses all entries

- Individual <url> blocks, one for each page

| Tag | Purpose | Required? |
|---|---|---|
| <loc> | Page URL | Yes |
| <lastmod> | Update timestamp | No |
| <priority> | Crawl importance | No |

By organizing pages this way, you guide crawlers efficiently. Search engines such as Google re-read submitted sitemaps periodically, which helps new content get spotted sooner.
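To make the structure above concrete, here is a minimal Python sketch that assembles a sitemap from a list of pages with the standard library's xml.etree.ElementTree. The function name build_sitemap and the (url, lastmod) input shape are illustrative assumptions; on Wix you would not normally need this, since the platform builds the file for you.

```python
import xml.etree.ElementTree as ET

# Namespace required by the sitemaps.org protocol
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build a <urlset> document from (url, lastmod) pairs.

    lastmod may be None, because the tag is optional.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, lastmod in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # required for every entry
        if lastmod is not None:
            ET.SubElement(entry, "lastmod").text = lastmod  # optional
    # xml_declaration=True (Python 3.8+) emits the <?xml ...?> header
    data = ET.tostring(urlset, encoding="utf-8", xml_declaration=True)
    return data.decode("utf-8")


print(build_sitemap([
    ("https://example.com/", "2024-03-15"),
    ("https://example.com/page1", None),
]))
```

ElementTree escapes characters such as & inside URLs automatically, which the protocol requires.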

How XML Sitemaps Enhance Your SEO

What if search engines could prioritize your latest content? XML files act as priority signals, guiding crawlers to recent updates. By highlighting when pages change, you reduce the time it takes for algorithms to spot fresh material. This speeds up indexing and keeps your site competitive.

Crawling and Indexing Benefits

XML files streamline how bots navigate your site. Instead of relying solely on internal links, crawlers can use the sitemap to locate every page. For example, a blog post updated last week is more likely to be recrawled promptly if its <lastmod> tag reflects the change. This efficiency minimizes missed pages and ensures critical updates don’t linger in obscurity.
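The sketch below models, in a simplified way, how a crawler could use <lastmod> to decide which URLs to revisit. It is not how Google actually schedules crawls; the function changed_since and its rule of also returning entries without a date are assumptions for illustration.

```python
import xml.etree.ElementTree as ET
from datetime import date

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def changed_since(sitemap_xml, last_crawl):
    """Return <loc> values whose <lastmod> date falls after last_crawl.

    Entries without <lastmod> are returned too, since their freshness is
    unknown. Only the leading YYYY-MM-DD part of <lastmod> is compared.
    """
    root = ET.fromstring(sitemap_xml)
    changed = []
    for entry in root.findall("sm:url", NS):
        loc = entry.findtext("sm:loc", default="", namespaces=NS).strip()
        lastmod = entry.findtext("sm:lastmod", default=None, namespaces=NS)
        if lastmod is None or date.fromisoformat(lastmod.strip()[:10]) > last_crawl:
            changed.append(loc)
    return changed
```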

Boosting Site Discoverability

Pages buried in complex menus or lacking internal links often go unnoticed. A well-structured XML file acts as a safety net, helping even orphaned pages get discovered and indexed. One study showed sites with optimized sitemaps saw 27% faster indexing of new content compared to those without.

While meta tags and headers matter, XML files provide a direct line to search engines. They’re especially vital for large sites or those with dynamic content. Pairing them with smart SEO practices creates a robust strategy for visibility.

Types of Sitemaps Explained

Choosing the right format for your website’s navigation guide depends on its size, content, and goals. Three primary options exist: XML, text, and syndication feeds. Each serves distinct purposes, balancing simplicity with advanced functionality.

XML: The SEO Powerhouse

XML files remain the gold standard for search engine communication. They support metadata like update dates and priority levels, making them ideal for large or complex websites. For example, e-commerce platforms with thousands of product pages benefit from XML’s structured data.

Text Files: Simplicity First

A basic text document lists URLs without extra details. This lightweight option works well for smaller sites or those with limited technical resources. However, it lacks customization features and can’t signal content freshness to crawlers.
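A text sitemap is simple enough to generate in a few lines. This sketch assumes the usual requirements for the format: one absolute URL per line, saved as UTF-8. build_text_sitemap is a hypothetical helper name.

```python
from urllib.parse import urlsplit


def build_text_sitemap(urls):
    """Return text-sitemap content: one absolute URL per line, no duplicates."""
    cleaned = []
    for url in urls:
        url = url.strip()
        parts = urlsplit(url)
        if parts.scheme not in ("http", "https") or not parts.netloc:
            raise ValueError(f"not an absolute URL: {url!r}")
        cleaned.append(url)
    # dict.fromkeys drops repeats while keeping first-seen order
    return "\n".join(dict.fromkeys(cleaned)) + "\n"
```

To save the result, write it with an explicit encoding, for example with open("sitemap.txt", "w", encoding="utf-8").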

Syndication Feeds: Real-Time Updates

RSS or Atom feeds list your newest content, and search engines that accept feeds as sitemaps can fetch them to pick up new URLs quickly. News outlets and blogs often use this format to ensure rapid indexing of articles. One study found that sites using feeds saw 35% faster indexing for time-sensitive posts compared to XML alone.
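To see what a feed contributes, the sketch below pulls each entry's link and <updated> timestamp out of an Atom 1.0 feed, which is essentially the same URL-plus-date information a sitemap carries. It assumes a well-formed Atom document; feed_entries is an illustrative name, and RSS 2.0 feeds use different elements (<item>, <link>, <pubDate>) that this code does not handle.

```python
import xml.etree.ElementTree as ET

ATOM_NS = {"a": "http://www.w3.org/2005/Atom"}


def feed_entries(atom_xml):
    """Return (url, updated) pairs for each <entry> in an Atom 1.0 feed."""
    root = ET.fromstring(atom_xml)
    pairs = []
    for entry in root.findall("a:entry", ATOM_NS):
        href = None
        for link in entry.findall("a:link", ATOM_NS):
            # In Atom, a <link> without rel means rel="alternate",
            # i.e. the public page for this entry.
            if link.get("rel", "alternate") == "alternate":
                href = link.get("href")
                break
        updated = entry.findtext("a:updated", default=None, namespaces=ATOM_NS)
        if href:
            pairs.append((href, updated))
    return pairs
```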

| Format | Best For | Limitations |
|---|---|---|
| XML | Large sites, SEO depth | Requires technical setup |
| Text | Simple sites, quick setup | No metadata support |
| RSS/Atom | Dynamic content, blogs | Less crawler priority |

While XML dominates SEO strategies, combining formats maximizes visibility. Use text files for simple URL lists and syndication feeds for fresh content. Always prioritize your users’ experience alongside technical requirements.

Essential Elements for an Effective XML Sitemap

A well-structured file acts like a translator between your website and search algorithms. To maximize its impact, every entry must follow strict formatting rules while leveraging optional signals for better prioritization.

Mandatory and Optional URL Tags

Every valid entry requires the <loc> tag to specify page addresses. Missing this element makes the sitemap invalid under the protocol, so crawlers may reject it. Optional tags add layers of control:

- <lastmod>: Marks content updates for blogs or posts

- <priority>: Suggests which pages matter most, like products (Google ignores this tag)

- <changefreq>: Suggests how often pages change (Google ignores this tag)

| Tag Type | Function | Example Use Case |
|---|---|---|
| Required | URL location | Homepage, contact page |
| Optional | Update tracking | Seasonal product pages |

Optimizing with <lastmod> and Other Metadata

Search engines prioritize freshness. An accurate <lastmod> timestamp helps bots spot revised posts faster. E-commerce sites benefit by tagging restocked products with precise dates.

Formatting errors create roadblocks. Always use W3C Datetime values for dates (for example, YYYY-MM-DD) and fully qualified, absolute URLs. Google’s sitemap guidelines explain how to avoid the syntax mistakes that trip up search engines.
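A quick pre-submission check can catch both problems. This sketch validates <lastmod> values against a common subset of the W3C Datetime format and checks that each <loc> is an absolute http(s) URL. It is deliberately stricter than the full format (year-only and year-month values are rejected), and the helper names are hypothetical.

```python
import re
from datetime import date
from urllib.parse import urlsplit

# A common subset of W3C Datetime: YYYY-MM-DD, optionally followed by a time
# (hh:mm, hh:mm:ss or hh:mm:ss.s) with a required zone: Z or +hh:mm / -hh:mm.
W3C_DATETIME = re.compile(
    r"\d{4}-\d{2}-\d{2}"
    r"(T\d{2}:\d{2}(:\d{2}(\.\d+)?)?(Z|[+-]\d{2}:\d{2}))?"
)


def lastmod_is_valid(value):
    """True if value matches the pattern and starts with a real calendar date."""
    if not W3C_DATETIME.fullmatch(value):
        return False
    try:
        date.fromisoformat(value[:10])  # rejects impossible dates like 2024-02-30
    except ValueError:
        return False
    return True


def loc_is_valid(value):
    """True if value is an absolute http(s) URL with no surrounding spaces."""
    parts = urlsplit(value)
    return value == value.strip() and parts.scheme in ("http", "https") and bool(parts.netloc)
```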

Dynamic sites should automate timestamp updates. A travel blog adding daily posts could sync its CMS to refresh <lastmod> values automatically. This keeps crawlers focused on your latest content.
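One way to automate this is to derive <lastmod> from each post's real edit time instead of stamping every URL with the moment the sitemap was rebuilt. The Post record below is a hypothetical stand-in for whatever your CMS stores; the sketch assumes timezone-aware timestamps and normalizes them to UTC.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class Post:
    """Hypothetical CMS record; your platform's field names will differ."""
    url: str
    updated_at: datetime  # timezone-aware time of the last real edit


def lastmod_entries(posts):
    """Return (url, lastmod) pairs using each post's own edit time in UTC."""
    return [
        (post.url, post.updated_at.astimezone(timezone.utc).isoformat(timespec="seconds"))
        for post in posts
    ]
```

These pairs are ready to write into <url> entries; a post that was not edited keeps its old date, so crawlers are not told that unchanged pages are fresh.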

Dynamic vs. Static Sitemaps

Your website’s visibility depends on how efficiently search engines track changes. Two approaches dominate: dynamic and static systems. The first updates automatically when you add or edit content. The second requires manual adjustments, risking outdated information if neglected.

Why Real-Time Updates Matter

Dynamic files shine in fast-paced environments. For example, blogs adding daily posts benefit from instant updates. Crawlers see new URLs the next time they fetch the sitemap, which speeds up indexing. This automation also reduces human error: no forgotten pages or typos.

Static systems, on the other hand, demand constant attention. If a product page gets removed but stays listed, search engines may index broken links. One study found sites using static files experienced 40% more crawl errors compared to dynamic setups.
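The risk described above is easy to detect if you can list the pages that actually exist. This sketch compares a static sitemap's URLs with the live set; where the live list comes from (a CMS export or a crawl) is an assumption left to you, and sitemap_drift is a hypothetical name.

```python
def sitemap_drift(listed_urls, live_urls):
    """Compare a static sitemap against the pages that currently exist.

    Returns (stale, missing): URLs still listed but no longer live, and
    live URLs the sitemap has not picked up yet. Both lists are sorted.
    """
    listed, live = set(listed_urls), set(live_urls)
    stale = sorted(listed - live)
    missing = sorted(live - listed)
    return stale, missing
```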

| Feature | Dynamic | Static |
|---|---|---|
| Update Method | Automatic | Manual |
| Maintenance | Low effort | High effort |
| Indexing Speed | Minutes | Days |

Search engines make the best use of sitemaps that stay current, and dynamic systems keep them current without extra work. They also simplify large-scale projects: imagine updating 500 product pages by hand versus letting software handle it.

While static files work for small sites, growth exposes their limits. Missing even one update can bury critical pages in search results. Automated solutions keep your content visible with minimal effort.

Optimizing Your Website for Search Engines

The architecture of your website acts as a silent guide for both visitors and algorithms. While automated tools like XML files lay the groundwork, internal linking and logical site structure determine how effectively search engines crawl and interpret your content.

Building Pathways for Bots and Users

Internal links act as bridges between related pages. For example, linking a blog post about “SEO Basics” to a service page for “Professional Optimization” creates context. This strategy distributes authority across your site while helping search engines discover hidden pages.

Best practices for site structure include:

- Organizing content into clear categories (e.g., “Blog > SEO Tips”)

- Limiting menu depth to three clicks from the homepage

- Using breadcrumb navigation to clarify page relationships
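To check the three-click guideline, you can treat internal links as a graph and run a breadth-first search from the homepage. The sketch below assumes you already have a mapping of each page to the pages it links to (for example, from a crawl export); pages it cannot reach are orphans, which are exactly the pages a sitemap should still list. The function names are illustrative.

```python
from collections import deque


def click_depths(links, home):
    """Breadth-first search over internal links: clicks needed from home.

    links maps each page to the pages it links to. Pages that cannot be
    reached from home are absent from the result.
    """
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, ()):
            if target not in depth:
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


def audit_structure(links, home, max_depth=3):
    """Return (too_deep, orphans) as sorted lists of pages."""
    depth = click_depths(links, home)
    all_pages = set(links) | {t for targets in links.values() for t in targets}
    too_deep = sorted(p for p, d in depth.items() if d > max_depth)
    orphans = sorted(all_pages - set(depth))
    return too_deep, orphans
```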

| Internal Linking Strategy | Impact |
|---|---|
| Contextual anchor text | Boosts keyword relevance |
| Topical clusters | Strengthens content themes |

Tools like Bing Webmaster Tools provide crawl reports to identify gaps. If bots struggle to reach key pages, adjust your linking strategy. Pair this data with heatmaps to ensure user-friendly navigation.

Actionable tips:

- Audit links quarterly to fix broken connections

- Prioritize pages with high traffic potential

- Use nofollow tags sparingly to guide crawlers

A cohesive structure helps search engines index efficiently while keeping visitors engaged. Combined with technical SEO, these elements form a foundation for sustainable growth.

Wix Sitemaps and Automatic Integration

Modern website builders streamline SEO tasks through smart automation. When you publish a new site, the platform instantly creates a comprehensive XML file listing all pages. This document lives at /sitemap.xml, serving as a master directory for algorithms.

How Automatic Generation Works

The system updates your file whenever content changes. New blog posts or product listings appear within minutes. Separate sitemaps, grouped under the main index, cover different content types such as events, galleries, or stores, ensuring precise categorization.
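On sites where content types get their own sitemaps, opening /sitemap.xml may show a sitemap index rather than a list of pages. The sketch below lists the child sitemaps, assuming the file follows the standard <sitemapindex> format from sitemaps.org. The function name is illustrative, and the child file names in the test data are examples, not guaranteed to match what Wix generates.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
NS = {"sm": SITEMAP_NS}


def child_sitemaps(index_xml):
    """Return the <loc> of each child sitemap in a <sitemapindex> document."""
    root = ET.fromstring(index_xml)
    if root.tag != f"{{{SITEMAP_NS}}}sitemapindex":
        raise ValueError(f"not a sitemap index (root element is {root.tag})")
    return [
        entry.findtext("sm:loc", default="", namespaces=NS).strip()
        for entry in root.findall("sm:sitemap", NS)
    ]
```

To inspect a live site, you could pass in the downloaded bytes, for example child_sitemaps(urllib.request.urlopen("https://www.example.com/sitemap.xml").read()), substituting your own domain.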

Built-in integrations can submit these files directly to search engines; you’ll find them in your dashboard’s SEO settings. This eliminates manual submissions and helps keep indexed pages current.

Tailoring Your Setup

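Need to exclude sensitive pages? Use the robots.txt editor to block crawlers from specific URLs. Because robots.txt controls crawling rather than indexing, also set pages you want kept out of search results to noindex in their SEO settings. The platform also lets you: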

- Prioritize key sections with custom metadata

- Monitor performance through a connected Google Search Console account

- Fix broken links detected during automated scans

Follow this checklist for optimal results:

- Verify domain ownership in Google’s tools

- Submit your XML sitemap in Google Search Console

- Review the page indexing report monthly

- Review and update robots.txt rules quarterly

These steps keep your content visible to search engines while maintaining control over private areas.

Generating a Sitemap with SEO Tools

When automated solutions fall short, advanced SEO tools step in to fill the gap. Third-party software like Screaming Frog offers granular control over XML sitemaps, especially for sites requiring custom setups.

Using Screaming Frog for Manual Generation

This desktop tool crawls your entire domain much as a search engine’s crawler would. It compiles every URL into a structured list while respecting robots.txt rules. By default, pages marked “noindex” or blocked by directives stay off the final file.

Here’s how it works:

- Enter your domain into the spider tool

- Filter results to exclude non-essential pages

- Export the cleaned list as an XML sitemap
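The filtering step can also be scripted if you export crawl results. This sketch is not Screaming Frog's own logic or export format: the row keys (url, status, noindex, canonical, blocked) are hypothetical stand-ins for whatever columns your export contains. It keeps only URLs that return 200, are indexable, are not blocked by robots.txt, and are their own canonical.

```python
def indexable_urls(crawl_rows):
    """Keep only the URLs that belong in an XML sitemap.

    Each row is a dict with hypothetical keys: 'url', 'status' (HTTP code),
    'noindex' (bool), 'canonical' (str or None), 'blocked' (bool).
    """
    keep = []
    for row in crawl_rows:
        if row["status"] != 200 or row["noindex"] or row["blocked"]:
            continue
        canonical = row.get("canonical")
        if canonical and canonical != row["url"]:
            continue  # another URL is the preferred version of this page
        keep.append(row["url"])
    return keep
```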

Dynamic sites benefit from scheduled crawls. Set weekly scans to capture new product pages or blog posts. Host the generated file on your server and submit it in each search engine’s webmaster tools.

| Feature | Benefit |
|---|---|
| Custom Filters | Remove duplicate or low-value pages |
| Bulk Export | Generate multiple sitemaps for large sites |

Platforms with built-in automation (like Wix) still require manual checks. Tools like this ensure no critical pages slip through algorithmic cracks.

Submitting Your XML Sitemap to Major Search Engines

Getting your website indexed starts with telling search engines where to look. Submitting your XML file directly to platforms like Google Search Console ensures bots prioritize crawling your content. This step bridges the gap between your site and organic visibility.

Google Search Console: Submission and Feedback

First, verify site ownership through DNS records or HTML file upload. Navigate to the Indexing section and select Sitemaps. Enter your sitemap URL (usually /sitemap.xml) and click Submit. The dashboard will show “Success” status or errors like blocked URLs.

Common issues include:

- 404 errors from deleted pages

- Blocked resources in robots.txt

- Incorrect URL formatting

Bing Webmaster Tools and Other Platforms

Bing’s process mirrors Google’s. Add your site, verify ownership, and submit the XML file in the Sitemaps section. Yahoo uses Bing’s data, while Yandex requires separate submissions through its webmaster portal.

| Platform | Submission URL | Verification Method |
|---|---|---|
| Google | /sitemap.xml | DNS or HTML file |
| Bing | /sitemap.xml | Meta tag or XML file |
| Yandex | /sitemap.xml | DNS or email |

Resubmit your file after major updates to prompt recrawling. Tools like Google Search Console track indexing progress, showing how many of your pages are indexed in Google. Fix errors promptly to keep crawling and indexing on track.

Updating and Validating Your Sitemap

Keeping your site’s directory accurate requires regular attention. Search engines rely on up-to-date XML files to crawl efficiently, but errors can derail indexing. A proactive approach ensures your pages stay visible and competitive.

Smart Validation Strategies

Google Search Console offers ongoing feedback on your XML file’s health. Open the Page indexing report (formerly called Coverage) to spot issues like 404 errors or blocked pages. Third-party tools like XML-Sitemaps Validator check syntax compliance, ensuring your code meets search engine standards.

Common problems include:

- URLs restricted by robots.txt rules

- Incorrect date formats in <lastmod> tags

- Duplicate entries skewing crawl budgets
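Duplicate entries are simple to spot programmatically. This sketch counts <loc> values in a standard <urlset> file and reports any that appear more than once; it compares URLs exactly after trimming whitespace, so variants such as a trailing slash count as different URLs. duplicate_locs is a hypothetical name.

```python
import xml.etree.ElementTree as ET
from collections import Counter

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def duplicate_locs(sitemap_xml):
    """Return {url: count} for every <loc> that appears more than once."""
    root = ET.fromstring(sitemap_xml)
    locs = [
        entry.findtext("sm:loc", default="", namespaces=NS).strip()
        for entry in root.findall("sm:url", NS)
    ]
    return {url: n for url, n in Counter(locs).items() if n > 1}
```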

| Tool | Function |
|---|---|
| Google Search Console | Identifies indexing errors |
| Screaming Frog | Audits internal links |
| Online Validators | Checks XML structure |

To fix robots.txt conflicts, review directives blocking key pages. Adjust rules to allow crawlers access while keeping sensitive areas private. For example, if your login page is accidentally blocked, update the file to exclude only admin sections.
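Before submitting, you can cross-check your sitemap against robots.txt with Python's standard urllib.robotparser. Treat the result as approximate: the standard-library parser matches paths by simple prefix in file order and does not implement Google's * and $ wildcards or longest-match precedence. The function name and the Googlebot default are assumptions for illustration.

```python
from urllib.robotparser import RobotFileParser


def blocked_sitemap_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the sitemap URLs that the given robots.txt rules disallow."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]
```

Any URL this returns is one you are asking search engines to crawl while also telling them not to, so either the sitemap or the robots.txt rule needs to change.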

Schedule monthly audits using automated platforms like Ahrefs. Set alerts for sudden drops in indexed pages or crawl errors. Pair this with manual checks after major site updates, such as launching a new product category, and resubmit your sitemap in Search Console once the changes are live.
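An alert for sudden drops boils down to comparing consecutive counts. This sketch assumes you have exported indexed-page counts over time from your audit tool; the 20% threshold and the indexing_drops name are arbitrary, illustrative choices.

```python
def indexing_drops(snapshots, threshold=0.2):
    """Flag snapshots where the indexed-page count fell by more than threshold.

    snapshots is a chronological list of (label, indexed_count) pairs.
    Returns the labels of the snapshots where the drop occurred.
    """
    alerts = []
    for (_, prev), (label, current) in zip(snapshots, snapshots[1:]):
        if prev > 0 and (prev - current) / prev > threshold:
            alerts.append(label)
    return alerts
```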

Consistency matters. Establish a routine to verify metadata accuracy and URL statuses. This prevents minor issues from snowballing into ranking drops.

Conclusion

A well-structured website roadmap ensures search engines find and prioritize your content effectively. By mastering sitemap creation and updates, you guide crawlers to pages that matter most. Platforms with automated tools simplify this process, but strategic tweaks—like excluding outdated pages—keep your listings sharp.

Regular audits using tools like Google Search Console help maintain accuracy. These checks ensure your XML files reflect current content while fixing crawl errors quickly. For those using streamlined sitemap management features, balancing automation with manual oversight maximizes visibility.

Apply the steps outlined here to improve indexing speed and user navigation. Test different metadata tags, submit updated files to search engines, and monitor performance metrics. This proactive approach keeps your site competitive in rankings.

Remember: a dynamic, well-maintained sitemap isn’t optional—it’s essential for sustainable SEO success. Start optimizing today to unlock your website’s full potential.