Fixing Large DOM Size: Best Practices for Web Developers

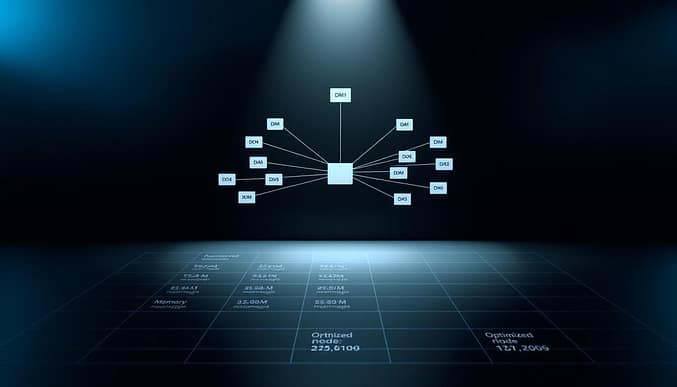

The Document Object Model (DOM) acts as the backbone of every web page, translating HTML into a structured hierarchy browsers use to render content. When this structure grows too complex, it can drag down page performance, creating sluggish interactions and frustrating delays for users.

Excessive nodes in the DOM, such as deeply nested elements or redundant components, force browsers to work harder during rendering. This not only increases loading time but also strains device memory, especially on mobile. Audit tools such as Google Lighthouse flag these issues, warning developers when node counts surpass recommended thresholds.

Why does this matter? A bloated DOM impacts real-world usability. Pages take longer to become interactive, and dynamic updates—like filtering a product list—may stutter. Even minor tweaks to the structure can trigger costly re-renders, eating into processing power.

Key Takeaways

- The DOM organizes a page’s structure, but complexity slows rendering.

- High node counts increase load times and drain device resources.

- Real-world impacts include laggy interactions and memory overload.

- Audit tools like Lighthouse flag excessive node counts and nesting depth.

- Streamlining the structure improves responsiveness across devices.

In the following sections, we’ll explore actionable strategies to simplify DOM trees while maintaining functionality. These techniques balance technical efficiency with seamless user experiences.

Understanding the Impact of a Large DOM on Web Performance

When you visit a website, the browser builds a Document Object Model (DOM) to represent and interact with the page’s structure. This hierarchical framework organizes every button, image, and text block into a tree-like layout that dictates how content appears on screens.

Defining the DOM and Its Role in Rendering

The DOM consists of nodes. Elements such as headings, lists, and links are one type of node and can carry attributes; text and comments are nodes too. For example, a navigation menu might contain 50 nodes due to nested links and icons. Browsers parse HTML incrementally as it arrives, converting tags into this interactive tree.
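To make the node/element distinction concrete, here is a minimal sketch that tallies both over a small tree. `NodeLike`, `tallyNodes`, and `makeNode` are hypothetical names introduced for illustration; the interface only assumes the `nodeType` and `childNodes` properties that real DOM nodes expose, so the same function should also accept `document.body` in a browser.

```typescript
// Numeric node-type codes as defined by the DOM standard.
const ELEMENT_NODE = 1;
const TEXT_NODE = 3;
const COMMENT_NODE = 8;

// Minimal structural stand-in for a DOM node (hypothetical name).
interface NodeLike {
  readonly nodeType: number;
  readonly childNodes: ArrayLike<NodeLike>;
}

interface NodeTally {
  total: number;    // every node, including text and comments
  elements: number; // only nodes whose nodeType is ELEMENT_NODE
}

// Walk the tree iteratively so very deep trees cannot overflow the call stack.
function tallyNodes(root: NodeLike): NodeTally {
  const tally: NodeTally = { total: 0, elements: 0 };
  const stack: NodeLike[] = [root];
  while (stack.length > 0) {
    const current = stack.pop()!;
    tally.total += 1;
    if (current.nodeType === ELEMENT_NODE) tally.elements += 1;
    Array.from(current.childNodes).forEach((child) => stack.push(child));
  }
  return tally;
}

// Helper for building sample nodes without a browser (illustrative only).
function makeNode(nodeType: number, childNodes: NodeLike[] = []): NodeLike {
  return { nodeType, childNodes };
}

// Models: <nav><!-- menu --><a>Home</a><a>Shop</a></nav>
const sampleNav = makeNode(ELEMENT_NODE, [
  makeNode(COMMENT_NODE),
  makeNode(ELEMENT_NODE, [makeNode(TEXT_NODE)]),
  makeNode(ELEMENT_NODE, [makeNode(TEXT_NODE)]),
]);

const navTally = tallyNodes(sampleNav);
// navTally is { total: 6, elements: 3 }
```

Even this tiny menu holds twice as many nodes as elements, which is why node counts and element counts reported by different tools rarely match.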

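Complex structures force browsers to work overtime. Pages with more than 1,500 nodes can see rendering delays on the order of 300 ms, although the exact cost depends on the device and on how styles and scripts touch the tree. Mobile devices struggle most, as memory limitations amplify slowdowns.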

How Excessive DOM Size Affects User Experience

Too many elements create bottlenecks. Scrolling may stutter, forms lag, and animations freeze. Pages exceeding 800 nodes have been reported to see bounce rates rise by roughly 15% on average, although such figures vary widely by site and audience.

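Each update to the DOM can trigger style and layout recalculations. A product filter that re-renders 100 items instead of 20 gives the browser roughly five times as many nodes to create and lay out on every change, which users feel as lag. Streamlining this tree ensures smoother transitions and faster responses.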
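One way to keep a filter from rendering everything at once is to hand the view only one page of matches at a time. The sketch below assumes a hypothetical `Product` shape and a page size of 20; `filterPage` and `PageSlice` are illustrative names, not part of any library.

```typescript
// Hypothetical product shape used only for this example.
interface Product {
  id: number;
  title: string;
  category: string;
}

interface PageSlice<T> {
  items: T[];           // the only items that should be turned into DOM nodes
  totalMatches: number; // lets the UI show "20 of 50" without rendering all 50
  hasMore: boolean;     // whether a "load more" control is needed
}

// Filter first, then slice, so that only one page of results reaches the DOM.
function filterPage<T>(
  all: readonly T[],
  matches: (item: T) => boolean,
  pageSize: number,
  pageIndex = 0,
): PageSlice<T> {
  const matched = all.filter(matches);
  const start = pageIndex * pageSize;
  const items = matched.slice(start, start + pageSize);
  return {
    items,
    totalMatches: matched.length,
    hasMore: start + items.length < matched.length,
  };
}

// 100 sample products, alternating between two categories.
const catalog: Product[] = Array.from({ length: 100 }, (_, i) => ({
  id: i + 1,
  title: `Product ${i + 1}`,
  category: i % 2 === 0 ? "shoes" : "bags",
}));

const isShoe = (p: Product) => p.category === "shoes";
const firstPage = filterPage(catalog, isShoe, 20);
const lastPage = filterPage(catalog, isShoe, 20, 2);
// firstPage: 20 items, totalMatches 50, hasMore true
// lastPage: 10 items, hasMore false
```

The rendering code then creates nodes for `firstPage.items` only, and requests the next page when the visitor scrolls or clicks a "load more" control.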

By prioritizing lean structures, developers prevent these issues before they frustrate users. The next sections will reveal practical methods to achieve this balance.

Warnings and Diagnostics: Avoid an Excessive DOM Size

Modern performance tools like Google Lighthouse act as early warning systems for overloaded web structures. When audits flag “Avoid an excessive DOM size”, it signals hidden inefficiencies that degrade user interactions.

Decoding Performance Alerts

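Lighthouse reports three figures for this audit: the total number of DOM elements, the maximum nesting depth, and the largest number of children under a single parent. Older guidance flagged pages with more than 1,500 nodes, a depth beyond 32 levels, or a parent with more than 60 children; more recent versions warn at roughly 800 elements in the body and fail the audit at around 1,400. These thresholds are rules of thumb rather than hard limits: the larger and deeper the tree, the more work every style and layout pass requires.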
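The same kind of measurement can be approximated by hand. The sketch below computes total elements, maximum depth, and the widest parent for any tree whose nodes expose a `children` collection. `ElementLike`, `measureTree`, and `el` are hypothetical names, and counting the root as depth 1 is an assumption that may differ from how Lighthouse counts.

```typescript
// Structural stand-in for a DOM element (hypothetical name); real elements
// expose a compatible `children` collection.
interface ElementLike {
  readonly children: ArrayLike<ElementLike>;
}

interface DomStats {
  totalElements: number;
  maxDepth: number;    // the root counts as depth 1 (assumption)
  maxChildren: number; // largest number of direct children under one parent
}

function measureTree(root: ElementLike): DomStats {
  const stats: DomStats = { totalElements: 0, maxDepth: 0, maxChildren: 0 };
  const stack: Array<{ node: ElementLike; depth: number }> = [
    { node: root, depth: 1 },
  ];
  while (stack.length > 0) {
    const { node, depth } = stack.pop()!;
    stats.totalElements += 1;
    stats.maxDepth = Math.max(stats.maxDepth, depth);
    stats.maxChildren = Math.max(stats.maxChildren, node.children.length);
    Array.from(node.children).forEach((child) =>
      stack.push({ node: child, depth: depth + 1 }),
    );
  }
  return stats;
}

// Helper for building a sample tree without a browser (illustrative only).
function el(...children: ElementLike[]): ElementLike {
  return { children };
}

// Models: body > [header > nav > ul > li*3, main > p*2]
const samplePage = el(
  el(el(el(el(), el(), el()))), // header > nav > ul > three li
  el(el(), el()),               // main > two p
);

const pageStats = measureTree(samplePage);
// pageStats is { totalElements: 10, maxDepth: 5, maxChildren: 3 }
```

In a browser console, a call such as `measureTree(document.body)` should give a quick reading of the same three figures for the live page.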

Pages breaching these limits can suffer 20-40% slower loading speeds. Every extra node adds to the work the browser does when it recalculates layouts and styles during updates. Dynamic features like live filters or infinite scroll become sluggish, especially on older devices.

While some developers ignore alerts that don’t lower Lighthouse scores, unresolved DOM issues compound over time. A Lighthouse DOM audit reveals exact problem areas, such as deeply nested menus or redundant wrappers.

Prioritize alerts showing high node counts or excessive depth. Simplifying these sections first yields immediate responsiveness gains. Regular monitoring prevents gradual bloat as sites evolve.
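Regular monitoring can be as simple as comparing measured figures against a budget and sorting the results so the worst offender comes first. The following is a minimal sketch: the default limits reuse the 1,500-node and 32-level thresholds quoted in this section, while `TreeMetrics`, `Budget`, `Violation`, and `checkBudget` are hypothetical names.

```typescript
interface TreeMetrics {
  totalNodes: number;
  maxDepth: number;
}

interface Budget {
  maxTotalNodes: number;
  maxDepth: number;
}

interface Violation {
  metric: keyof TreeMetrics;
  actual: number;
  limit: number;
  overshoot: number; // actual / limit, so 1.6 means 60% over budget
}

// Defaults mirror the thresholds quoted in this article; adjust per project.
const DEFAULT_BUDGET: Budget = { maxTotalNodes: 1500, maxDepth: 32 };

// Returns only the metrics that exceed their limit, worst overshoot first.
function checkBudget(
  metrics: TreeMetrics,
  budget: Budget = DEFAULT_BUDGET,
): Violation[] {
  const candidates: Violation[] = [
    {
      metric: "totalNodes",
      actual: metrics.totalNodes,
      limit: budget.maxTotalNodes,
      overshoot: metrics.totalNodes / budget.maxTotalNodes,
    },
    {
      metric: "maxDepth",
      actual: metrics.maxDepth,
      limit: budget.maxDepth,
      overshoot: metrics.maxDepth / budget.maxDepth,
    },
  ];
  return candidates
    .filter((v) => v.actual > v.limit)
    .sort((a, b) => b.overshoot - a.overshoot);
}

const heavyPage = checkBudget({ totalNodes: 2400, maxDepth: 35 });
const deepPage = checkBudget({ totalNodes: 1600, maxDepth: 40 });
const leanPage = checkBudget({ totalNodes: 700, maxDepth: 12 });
// heavyPage lists totalNodes first, deepPage lists maxDepth first,
// and leanPage is empty.
```

Running a check like this in a build or test step, with metrics gathered from a rendered page, gives an early signal when a template change pushes a page over budget.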

Effective Techniques for Fixing Large DOM Size

Overloaded web architectures create invisible roadblocks for users and search engines alike. Strategic optimizations can transform sluggish pages into responsive experiences while keeping core functionality intact.

Audit Third-Party Add-Ons

Plugins and themes often introduce hidden bloat. Remove unused widgets and replace poorly coded tools with lightweight alternatives. For example, Elementor 3.0 reportedly reduced default nodes by up to 40% compared to earlier versions.

Refine Code Efficiency

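Unoptimized templates and JavaScript often generate redundant elements, for example scripts that inject extra wrappers or render content the visitor never sees. Combining files and eliminating unused styles reduces the amount of CSS and JavaScript the browser must download and parse, while lazy loading defers off-screen content so that fewer nodes are processed during initial rendering.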

| Strategy | Benefit | Tools |
|---|---|---|
| Plugin Cleanup | Removes 20-50% orphaned nodes | Lighthouse, Chrome DevTools |
| Code Splitting | Reduces render-blocking resources | Webpack, Critical CSS |
| HTML Simplification | Cuts depth by 3-5 layers | HTML Validator, axe DevTools |

Re-Architect Page Layouts

Flatten nested structures by replacing div soups with semantic HTML. Avoid relying on display:none for hidden content, because hidden elements still exist in the DOM and still count toward its size; load or render that content conditionally instead. As DOM optimization experts recommend, splitting long pages into focused sections often yields better results than single-page designs.
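Flattening can be illustrated on a simplified tree model. In the sketch below, a wrapper is treated as redundant when it is a `div` with no attributes, no text, and exactly one child; that rule is an assumption made for the example, since real wrappers sometimes exist as styling or scripting hooks. `MarkupNode`, `isRedundantWrapper`, `flattenWrappers`, and `countNodes` are hypothetical names.

```typescript
// Simplified model of an HTML element (hypothetical, for illustration).
interface MarkupNode {
  tag: string;
  attributes?: Record<string, string>;
  text?: string;
  children: MarkupNode[];
}

// Assumed rule: a bare <div> whose only job is to hold a single child.
function isRedundantWrapper(node: MarkupNode): boolean {
  const hasAttributes = Object.keys(node.attributes ?? {}).length > 0;
  return (
    node.tag === "div" &&
    !hasAttributes &&
    !node.text &&
    node.children.length === 1
  );
}

// Returns a new tree in which every redundant wrapper is replaced by its child.
function flattenWrappers(node: MarkupNode): MarkupNode {
  let current = node;
  while (isRedundantWrapper(current)) {
    current = current.children[0];
  }
  return { ...current, children: current.children.map(flattenWrappers) };
}

function countNodes(node: MarkupNode): number {
  return 1 + node.children.reduce((sum, child) => sum + countNodes(child), 0);
}

// Models: <section><div><div><ul><li>A</li><li>B</li></ul></div></div></section>
const nestedSection: MarkupNode = {
  tag: "section",
  children: [
    {
      tag: "div",
      children: [
        {
          tag: "div",
          children: [
            {
              tag: "ul",
              children: [
                { tag: "li", text: "A", children: [] },
                { tag: "li", text: "B", children: [] },
              ],
            },
          ],
        },
      ],
    },
  ],
};

const flatSection = flattenWrappers(nestedSection);
// countNodes(nestedSection) is 6; countNodes(flatSection) is 4,
// and the <ul> is now a direct child of <section>.
```

The same idea applies when editing templates by hand: any wrapper that carries no class, attribute, or text and holds a single child is a candidate for removal, after checking that no stylesheet or script depends on it.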

Regular audits combined with incremental changes create compounding performance gains. Sites maintaining under 800 nodes typically see 1.5x faster interaction times, directly boosting user retention.

Conclusion

A streamlined DOM structure isn’t just technical jargon—it’s the difference between a site that converts and one that drives users away. Overloaded pages with thousands of nodes drain device memory, delay rendering, and frustrate visitors. As highlighted in our DOM optimization guide, common culprits like outdated plugins or bulky page builders often hide unnecessary elements.

Prioritizing lean code pays dividends. Trimming redundant wrappers, simplifying nested layouts, and auditing third-party scripts can slash node counts by 30-50%. This directly improves loading time and interaction smoothness, especially on mobile devices where resources matter most.

While Lighthouse warnings about excessive DOM size don’t always affect scores immediately, they signal deeper issues. Sites maintaining under 800 nodes see fewer reflow calculations and faster content visibility. Tools like Chrome DevTools make it easy to spot bloated sections—like tables with 100+ rows or deeply nested menus.

Regular checks prevent gradual complexity creep. Pair automated audits with manual reviews of dynamic components. The result? Pages that load quicker, retain visitors longer, and climb search rankings. Start today: every node removed is a step toward frictionless user experiences.